Welcome to InnovatingAI

InnovatingAI pioneers next-generation LLM-powered agents that redefine intelligent automation and decision-making.


What is InnovatingAI?

InnovatingAI develops advanced LLM-powered agents that enhance automation and decision-making through data science frameworks and problem-solving benchmarks, bridging AI theory with real-world applications.

InnovatingAI pioneers next-generation agents across four core areas:

Benchmark

Next-generation evaluation systems leveraging automated agent interactions to create adaptive testing environments that evolve with AI capabilities, measuring both performance and creative problem-solving

Agent

Autonomous systems combining reasoning, planning and tool-calling architectures that enable dynamic task decomposition and multi-agent collaboration for complex problem-solving
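The following minimal plan-then-act loop in Python makes "dynamic task decomposition" and "tool-calling" concrete. It is an illustrative sketch, not InnovatingAI's agent architecture. The `plan` function is a hypothetical stand-in for an LLM planner, and the tools in `TOOLS` are toy string functions chosen only for this example.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical tool registry: each tool maps a string input to a string output.
TOOLS: dict[str, Callable[[str], str]] = {
    "reverse": lambda s: s[::-1],
    "upper": lambda s: s.upper(),
    "count": lambda s: str(len(s)),
}


def plan(task: str) -> list[str]:
    """Stand-in planner: a real agent would ask an LLM to decompose the task.
    Here a task such as "reverse then upper" is split into tool names."""
    return [step.strip() for step in task.split(" then ")]


def run_agent(task: str, data: str) -> tuple[str, list[str]]:
    """Execute the planned steps in order, feeding each tool's output to the next."""
    result = data
    trace: list[str] = []
    for tool_name in plan(task):
        tool = TOOLS.get(tool_name)
        if tool is None:
            raise ValueError(f"unknown tool: {tool_name}")
        result = tool(result)
        trace.append(f"{tool_name} -> {result}")
    return result, trace


final, steps = run_agent("reverse then upper", "agent")
print(final)  # TNEGA
print(steps)  # ['reverse -> tnega', 'upper -> TNEGA']
```

Keeping the planner separate from the executor means the toy `plan` could be swapped for a model call without touching the loop that dispatches tools and records the trace.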

Resource

Intelligent resource orchestration frameworks that optimize compute allocation, memory utilization and energy efficiency across distributed AI workloads through real-time monitoring and adaptive scheduling
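The sketch below gives a rough picture of compute allocation by balancing jobs across workers with the textbook longest-processing-time-first greedy heuristic. This heuristic is swapped in for illustration and is not InnovatingAI's orchestration framework. The job names, costs, and worker names are hypothetical. A real-time scheduler would refresh the costs from monitoring data instead of using fixed estimates.

```python
from __future__ import annotations

import heapq


def schedule(jobs: dict[str, float], workers: list[str]) -> dict[str, list[str]]:
    """Greedy longest-processing-time-first balancing: each job, largest first,
    goes to whichever worker currently has the smallest total load."""
    heap = [(0.0, worker) for worker in workers]  # (current load, worker name)
    heapq.heapify(heap)
    assignment: dict[str, list[str]] = {worker: [] for worker in workers}
    for job, cost in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        load, worker = heapq.heappop(heap)  # least-loaded worker; ties break by name
        assignment[worker].append(job)
        heapq.heappush(heap, (load + cost, worker))
    return assignment


# Hypothetical job costs (e.g. estimated GPU-hours) and worker names.
jobs = {"train": 8.0, "embed": 4.0, "eval": 3.0, "tokenize": 2.0}
print(schedule(jobs, ["gpu0", "gpu1"]))
# {'gpu0': ['train'], 'gpu1': ['embed', 'eval', 'tokenize']}
```

Placing the largest jobs first keeps the final loads close (8.0 vs 9.0 here). A min-heap keyed on load makes each placement O(log w) for w workers.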

Model

Meta-learning architectures featuring self-optimizing neural networks that continuously refine their parameters through reinforcement learning loops and performance feedback mechanisms

Benchmark

- Task 1: atpos

- Task 2: belka

- Task 3: OAG

InnoGym: Benchmarking the Innovation Potential of AI Agents

- Real-World Tasks: Authentic challenges from multiple domains requiring creativity and planning

- Research-Level Challenges: Tasks that require reading and implementing cutting-edge research papers, with novel problem designs so that solutions cannot simply be found on the web

- Industrial-Grade Validation: Multi-stage evaluation from basic implementation to full research paper generation with rigorous code verification
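Multi-stage evaluation can be sketched as a gated pipeline. In this setup a submission advances to a harder stage only after passing the easier ones. The stage names, submission fields, and pass criteria below are hypothetical placeholders, not InnoGym's actual evaluation protocol.

```python
from __future__ import annotations

from typing import Callable


def evaluate(
    submission: dict, stages: list[tuple[str, Callable[[dict], bool]]]
) -> tuple[int, list[str]]:
    """Run stages in order and stop at the first failure; return (count passed, names)."""
    passed: list[str] = []
    for name, check in stages:
        if not check(submission):
            break
        passed.append(name)
    return len(passed), passed


# Hypothetical stages and submission fields, ordered from basic to demanding.
STAGES = [
    ("runs", lambda s: s.get("exit_code") == 0),
    ("valid_output", lambda s: s.get("output_rows", 0) > 0),
    ("beats_baseline", lambda s: s.get("score", 0.0) > s.get("baseline", float("inf"))),
]

print(evaluate({"exit_code": 0, "output_rows": 120, "score": 0.81, "baseline": 0.75}, STAGES))
# (3, ['runs', 'valid_output', 'beats_baseline'])
print(evaluate({"exit_code": 0, "output_rows": 0}, STAGES))
# (1, ['runs'])
```

Stopping at the first failed gate keeps costly checks, such as verifying generated research code, from running on submissions that do not yet execute.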

Agent

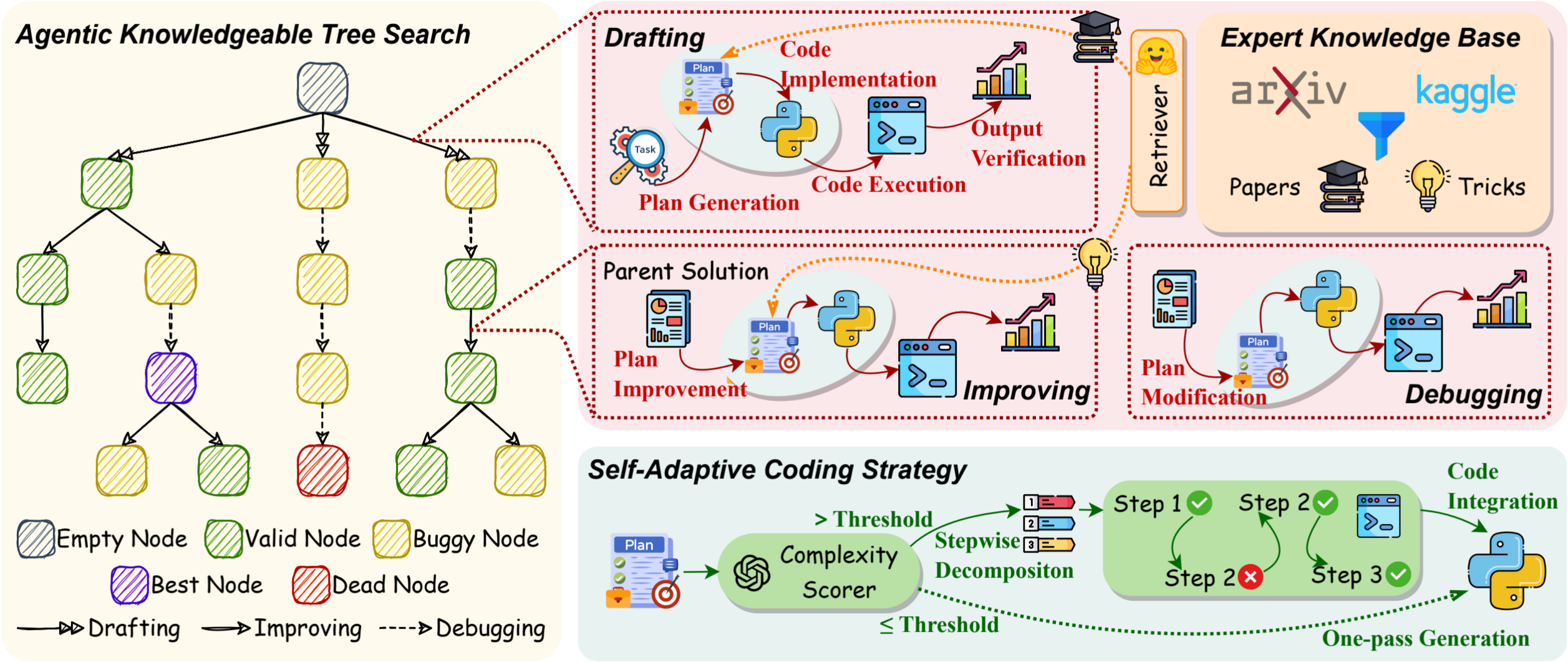

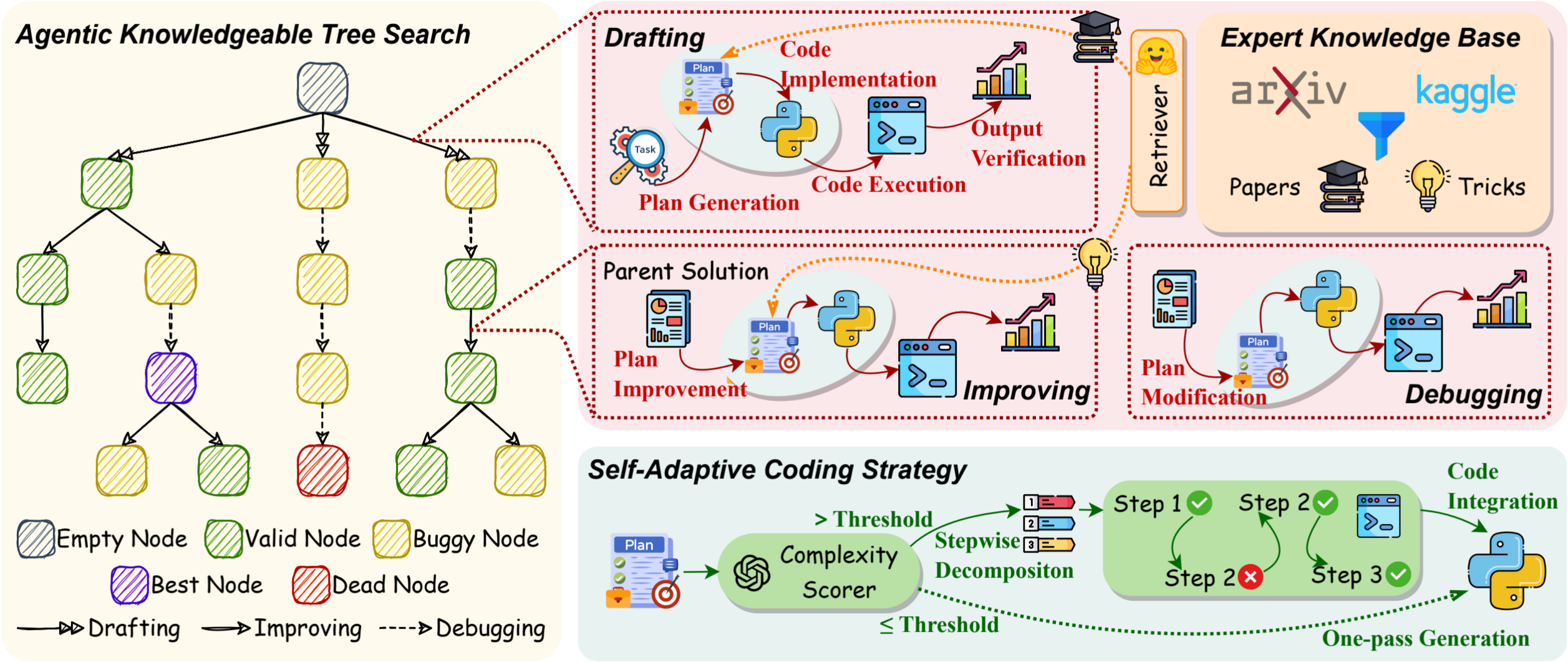

AutoMind: Adaptive Knowledgeable Agent for Automated Data Science

Revolutionizing automated data science with domain expertise integration and adaptive problem-solving.

- Expert Knowledge Base for Data Science: Curated domain expertise from Kaggle competitions and academic papers, enabling complex ML task solving with human-level insights

- Agentic Knowledge Tree Search Algorithm: Hierarchical, knowledge-guided exploration of solution spaces, representing a 13.5% improvement over the prior state-of-the-art (SOTA) on MLE-Bench

- Self-adaptive Coding Strategy: Dynamic code generation that automatically adjusts to task complexity, reducing token costs by 63% compared with the prior SOTA
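The following sketch shows one way knowledge-guided tree search over a solution space can work. It runs a generic best-first search: each node is a set of applied techniques, and each expansion adds one technique from a knowledge base. This is not AutoMind's algorithm. The knowledge base, the additive toy scoring function, and the expansion budget are all assumptions made for the example.

```python
from __future__ import annotations

import heapq
from typing import Callable


def knowledge_tree_search(
    knowledge: list[str],
    score: Callable[[frozenset[str]], float],
    budget: int,
) -> tuple[frozenset[str], float]:
    """Best-first search over candidate solutions. A node is the set of techniques
    applied so far; each child adds one technique from the knowledge base.
    At most `budget` nodes are expanded."""
    root: frozenset[str] = frozenset()
    best, best_score = root, score(root)
    frontier = [(-best_score, sorted(root), root)]  # max-heap via negated score
    seen = {root}
    expansions = 0
    while frontier and expansions < budget:
        _, _, node = heapq.heappop(frontier)
        expansions += 1
        for technique in knowledge:
            child = node | {technique}
            if child in seen:  # also skips techniques already applied in node
                continue
            seen.add(child)
            child_score = score(child)
            if child_score > best_score:
                best, best_score = child, child_score
            heapq.heappush(frontier, (-child_score, sorted(child), child))
    return best, best_score


# Hypothetical knowledge base with a toy validation score: each technique adds a fixed gain.
GAINS = {"feature_engineering": 0.05, "ensembling": 0.04, "target_encoding": 0.03}


def toy_score(applied: frozenset[str]) -> float:
    return 0.70 + sum(GAINS[t] for t in applied)


best, best_score = knowledge_tree_search(list(GAINS), toy_score, budget=1)
print(sorted(best), round(best_score, 2))  # ['feature_engineering'] 0.75
```

With a budget of one expansion, the search returns the single highest-gain technique. With a larger budget (say 10) it reaches the combination of all three. In practice the score would come from running and validating generated code. Each evaluation is therefore expensive, which is why the search expands the most promising node first and caps the number of expansions.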

Resources

Essential tools for your projects

Resource 1

Essential coding utilities for your projects

- Code formatting and linting

- Version control integration

- Debugging assistance

Resource 2

Curated datasets for testing and development

- Multiple file formats

- Clean and structured

- Ready to use

Resource 3

Step-by-step tutorials and examples

- Beginner to advanced

- Practical examples

- Regular updates

Model

| Weight Quantization | Backend | Prefill (tokens/sec) | Decode (tokens/sec) | Time to first token (sec) | Model size (MB) |
|---|---|---|---|---|---|
| dynamic_int4 | CPU | 118 | 12.8 | 9.2 | 4201 |
| dynamic_int4 | GPU | 446 | 16.1 | 15.1 | 4201 |

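The table above reports inference performance for our model on CPU and GPU backends, both using dynamic_int4 weight quantization. This scheme is intended to balance model size against inference speed. Because it is the only scheme listed, however, the table compares hardware backends rather than quantization trade-offs.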

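Key observations: The GPU backend speeds up prefill by about 3.8x over CPU (446 vs 118 tokens/sec) and decode by about 1.3x (16.1 vs 12.8 tokens/sec). Time to first token is nonetheless longer on GPU (15.1 s vs 9.2 s), so faster prefill does not translate directly into lower startup latency in this measurement. Model size is identical on both backends because both run the same dynamic_int4 weights.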
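The GPU-to-CPU ratios can be recomputed directly from the table. The 1024-token prompt in the second half is an assumption for illustration, since the table does not state the prompt length used in the benchmark.

```python
# Values copied from the table (dynamic_int4 on both backends).
metrics = {
    "CPU": {"prefill_tps": 118, "decode_tps": 12.8, "ttft_s": 9.2},
    "GPU": {"prefill_tps": 446, "decode_tps": 16.1, "ttft_s": 15.1},
}


def gpu_over_cpu(metric: str) -> float:
    """Ratio of the GPU value to the CPU value for one metric."""
    return metrics["GPU"][metric] / metrics["CPU"][metric]


print(f"prefill speedup: {gpu_over_cpu('prefill_tps'):.2f}x")  # 3.78x
print(f"decode speedup:  {gpu_over_cpu('decode_tps'):.2f}x")   # 1.26x
print(f"TTFT ratio:      {gpu_over_cpu('ttft_s'):.2f}x")       # 1.64x (GPU slower)

# Hypothetical 1024-token prompt: prefill time implied by throughput alone.
PROMPT_TOKENS = 1024
for backend, m in metrics.items():
    print(f"{backend}: {PROMPT_TOKENS / m['prefill_tps']:.2f} s of prefill")
# CPU: 8.68 s of prefill
# GPU: 2.30 s of prefill
```

Under that assumed prompt length, throughput alone would predict the GPU reaching its first token sooner, which is the opposite of the measured values. The reported time to first token therefore likely includes work beyond prefill, and the table does not break that work down.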

Publications

Cutting-edge frameworks advancing automated data science and AI innovation benchmarking

Team

Meet our dedicated team of AI researchers and engineers driving innovation forward

Ningyu Zhang

Leading expert in LLM-powered agents and automated data science frameworks

Da Zheng

Pioneering research in multi-agent collaboration and intelligent automation systems

Yixin Ou

Specializing in meta-learning architectures and performance optimization

Jingtian Zhang

Expert in distributed systems and intelligent resource orchestration

Yujie Luo

Research focus on intelligent automation and AI system optimization

Jingsheng Zheng

Advancing research in machine learning and computational intelligence

Acknowledgments

Our Sincere Thanks

We would like to express our sincere gratitude to AIDE and MLE-Bench for their significant contributions to our project. Their work has been invaluable, and we are truly grateful for the opportunity to build upon their open-source implementations.

We also deeply appreciate the continued support and collaboration from our community. A special thank you to all who have contributed by reporting issues and sharing their technical expertise; your efforts have played a crucial role in our project's development and success. 🙌